Week 5

What I Learned

Gaussian Splatting Detail Capture

Tested micro detail capture capabilities using Sharp AI

Explored SuperSplat for optimization and visualization

Discovered that high-detail captures are feasible with proper optimization techniques

Dynamic 3D Gaussians (4D Reconstruction)

Found multiple GitHub implementations for dynamic scene reconstruction:

Dynamic3DGaussians by JonathonLuiten (primary option)

4DGaussians by hustvl

SpacetimeGaussians by oppo-us-research

MoDGS for monocular input (single camera reconstruction)

Learned these can capture movement and temporal data, opening simulation possibilities

TouchDesigner Integration

Discovered TouchDesigner has a community Gaussian splatting plugin

Found a free motion tracking plugin for TouchDesigner

Explored TouchDesigner Gaussian splat integration examples

Confirmed TouchDesigner can work with Gaussian splat data in real-time workflows

Blender Integration

Identified that Blender has Gaussian splat support for workflow integration

Triangular Splatting

Identified as a GitHub resource to explore (alternative rendering technique)

To Do Next Week

Primary Goals:

Implement Dynamic 3DGS

Clone and test the Dynamic3DGaussians repository

Compare with 4DGaussians and SpacetimeGaussians implementations

Determine which best fits my VFX pipeline needs

Motion Tracking → Gaussian Splat Transform Pipeline

Implement a system where motion tracking data controls/transforms the Gaussian splat

Test real-time camera movements affecting splat positioning and orientation

Explore whether tracked motion can drive splat deformation or camera navigation

Explore Triangular Splatting

Research triangular splatting GitHub repos

Compare rendering quality/performance vs traditional Gaussian splatting

Secondary Goals:

Continue detail capture testing with different subject matter

Test Blender integration for potential VFX compositing applications
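For the rendering quality comparison listed above, one simple starting number is PSNR between a reference photo and a render from the same viewpoint. The sketch below is an assumption about how that comparison could be scored, not something taken from any of the repos. The function name `psnr` and the flat-list pixel format are hypothetical choices for illustration.

```python
import math


def psnr(reference, rendered, max_value=255.0):
    """Peak signal-to-noise ratio (dB) between two equally sized pixel lists.

    Both inputs are flat sequences of channel values (hypothetical format);
    higher PSNR means the render is closer to the reference.
    """
    if len(reference) != len(rendered):
        raise ValueError("images must contain the same number of values")
    if len(reference) == 0:
        raise ValueError("images must not be empty")

    # Mean squared error over every channel value.
    mse = sum((a - b) ** 2 for a, b in zip(reference, rendered)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10((max_value ** 2) / mse)


if __name__ == "__main__":
    photo = [100, 100, 100, 100]
    gaussian_render = [110, 90, 100, 100]
    print(f"PSNR: {psnr(photo, gaussian_render):.2f} dB")
```

PSNR only measures per-pixel error, so it is best read alongside a visual check like the side-by-side images below.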

Original Image

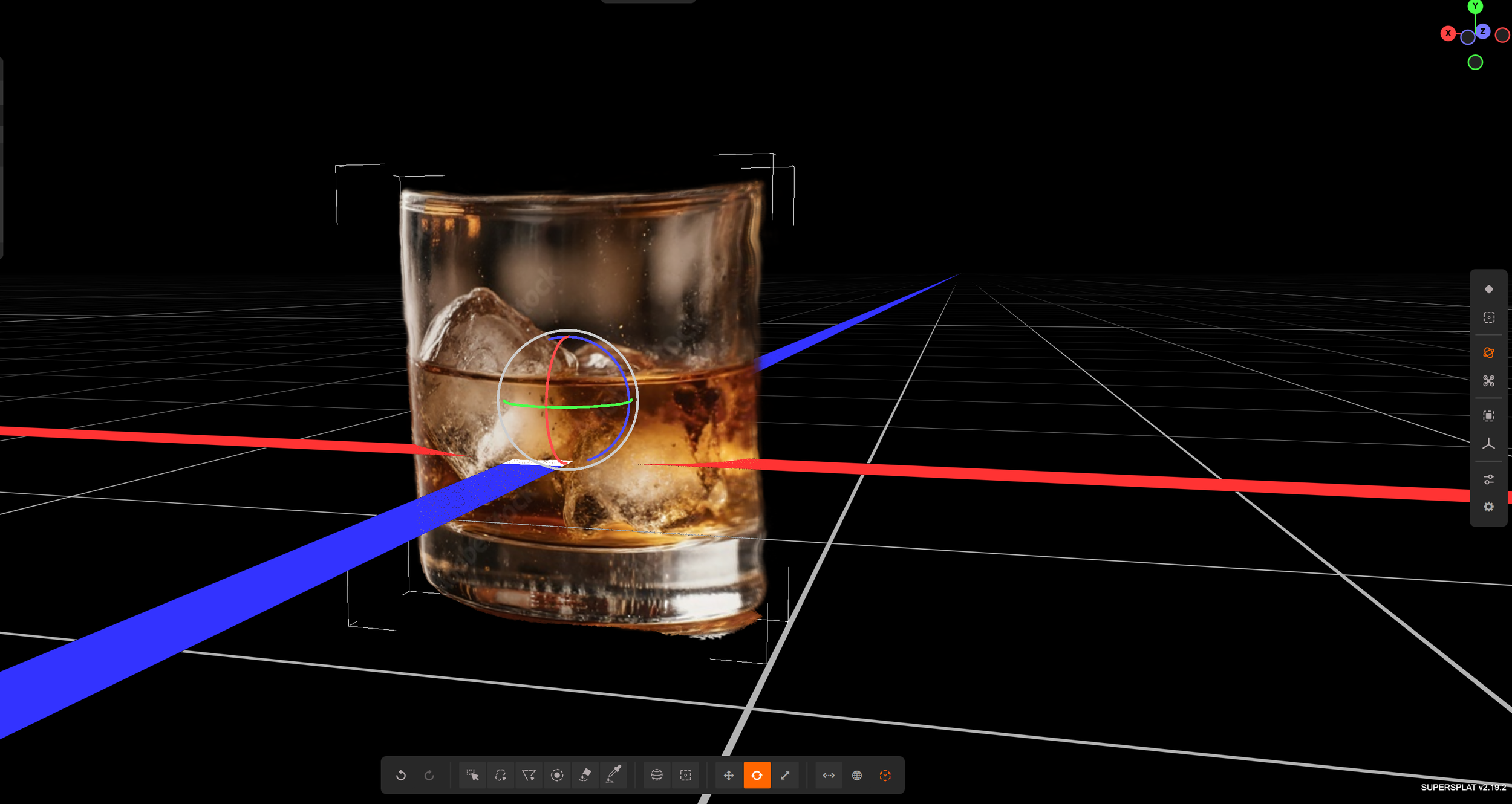

SuperSplat

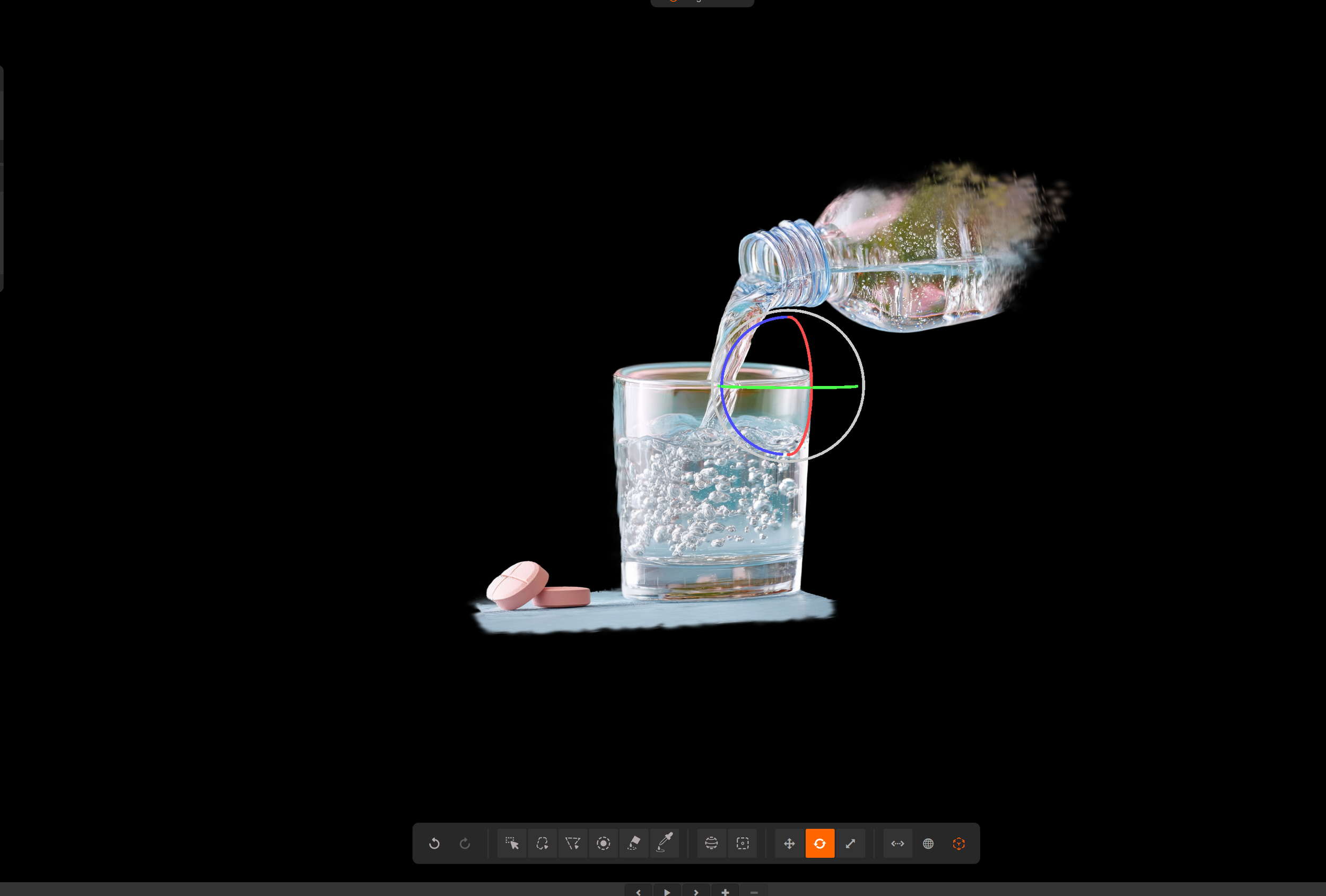

Original Image

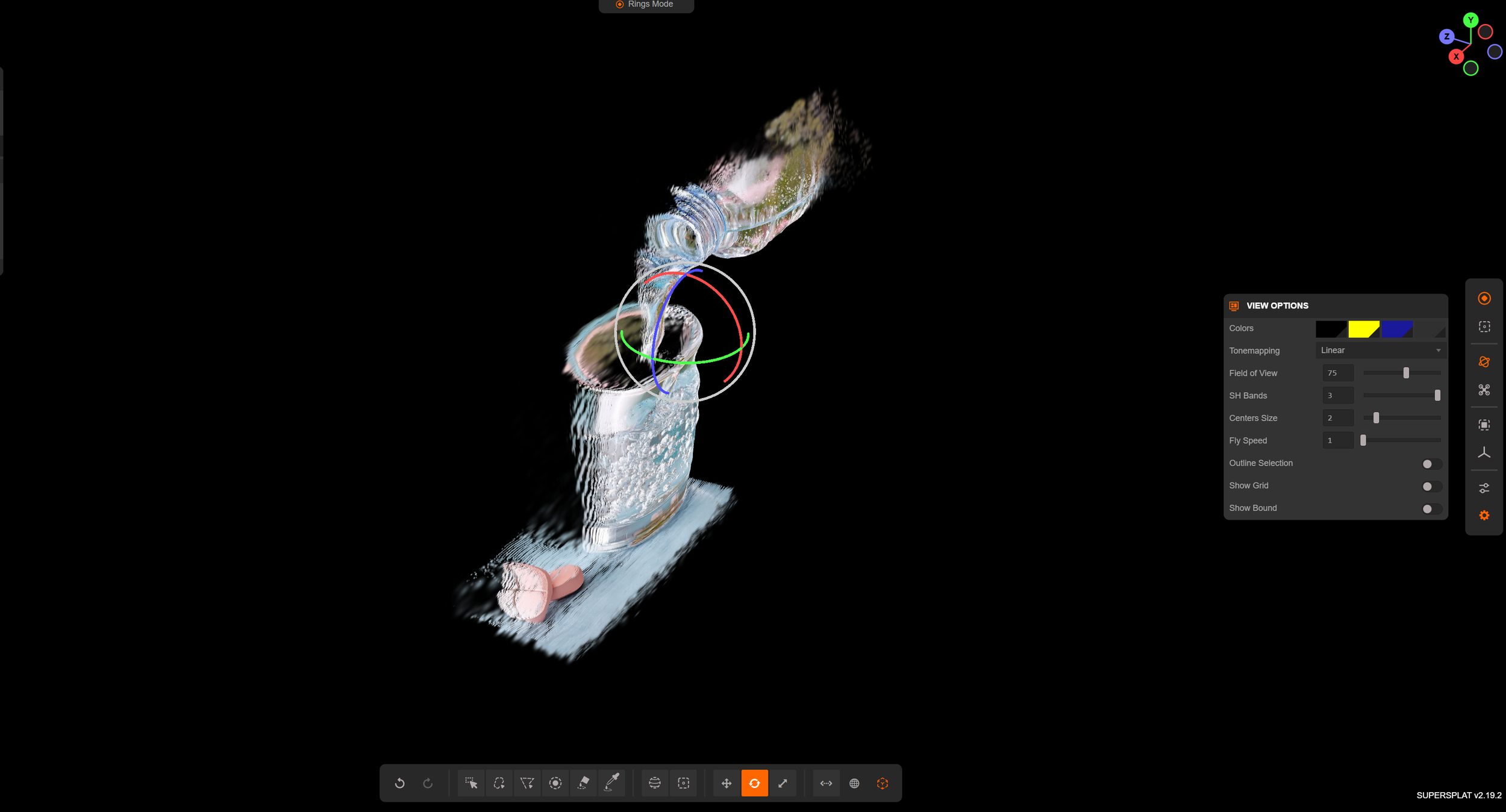

SuperSplat Optimization

Original Image

SuperSplat Optimization

Original Image

SuperSplat

Dynamic 3DGS Example:

Simulation Possibilities

https://github.com/JonathonLuiten/Dynamic3DGaussians

Other options:

https://github.com/hustvl/4DGaussians

https://github.com/oppo-us-research/SpacetimeGaussians?tab=readme-ov-file#installation

Monocular Input GitHub:

https://github.com/MobiusLqm/MoDGS?tab=readme-ov-file

Blender Gaussian Splat Example File:

Takeaways:

Most dynamic 3D Gaussian splatting methods need footage from multiple cameras

The monocular splat repository (MoDGS) has a PyTorch error I still have to fix

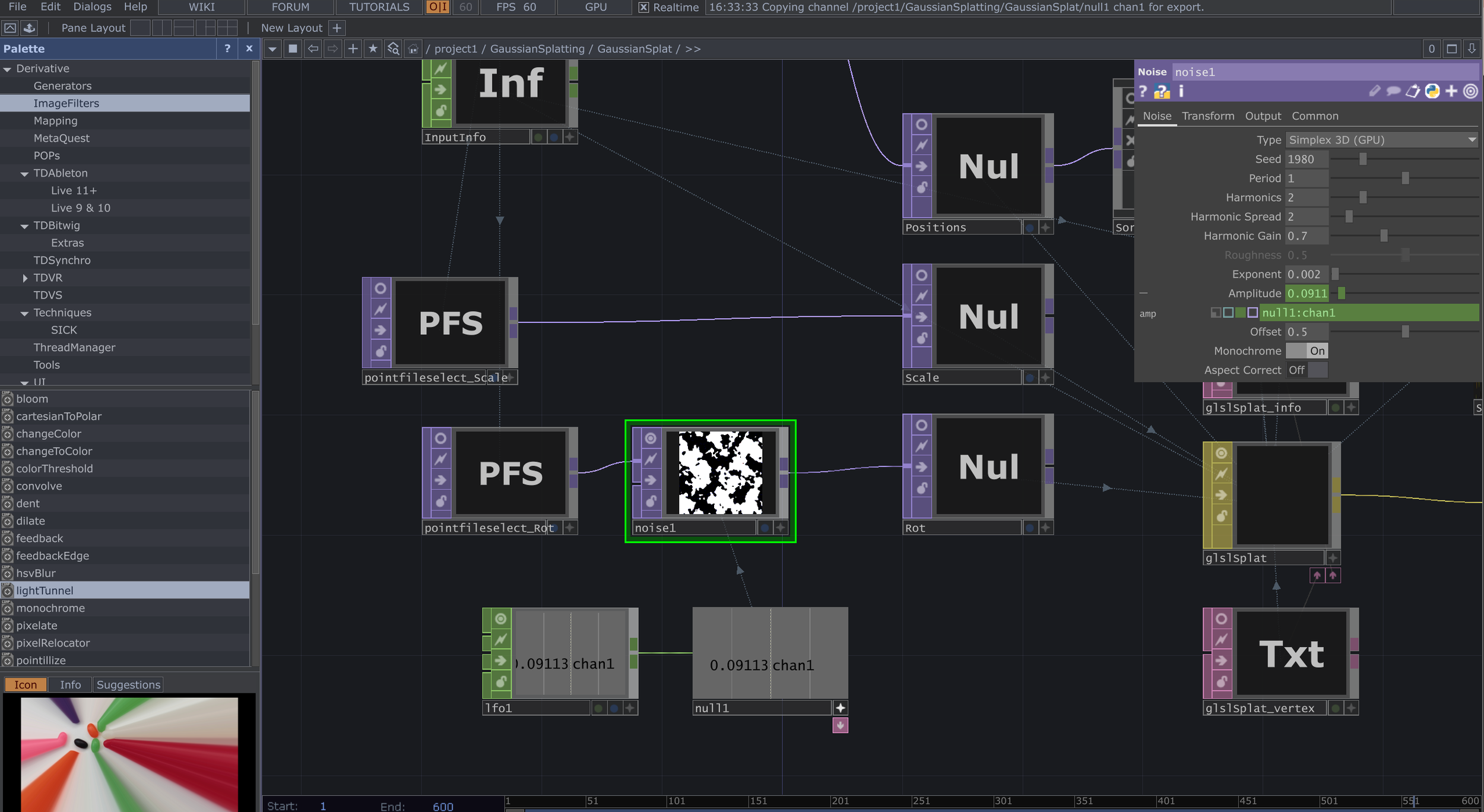

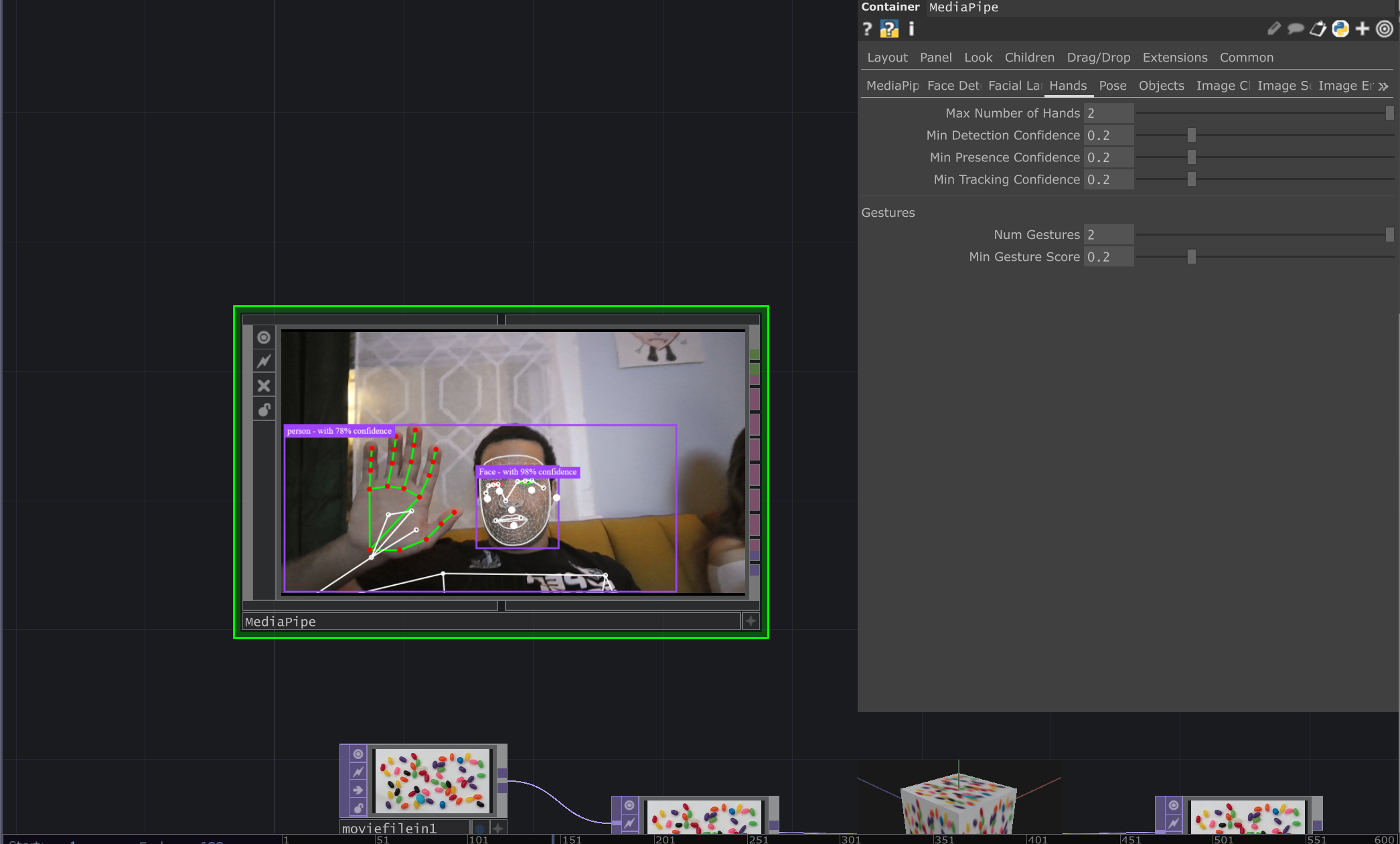

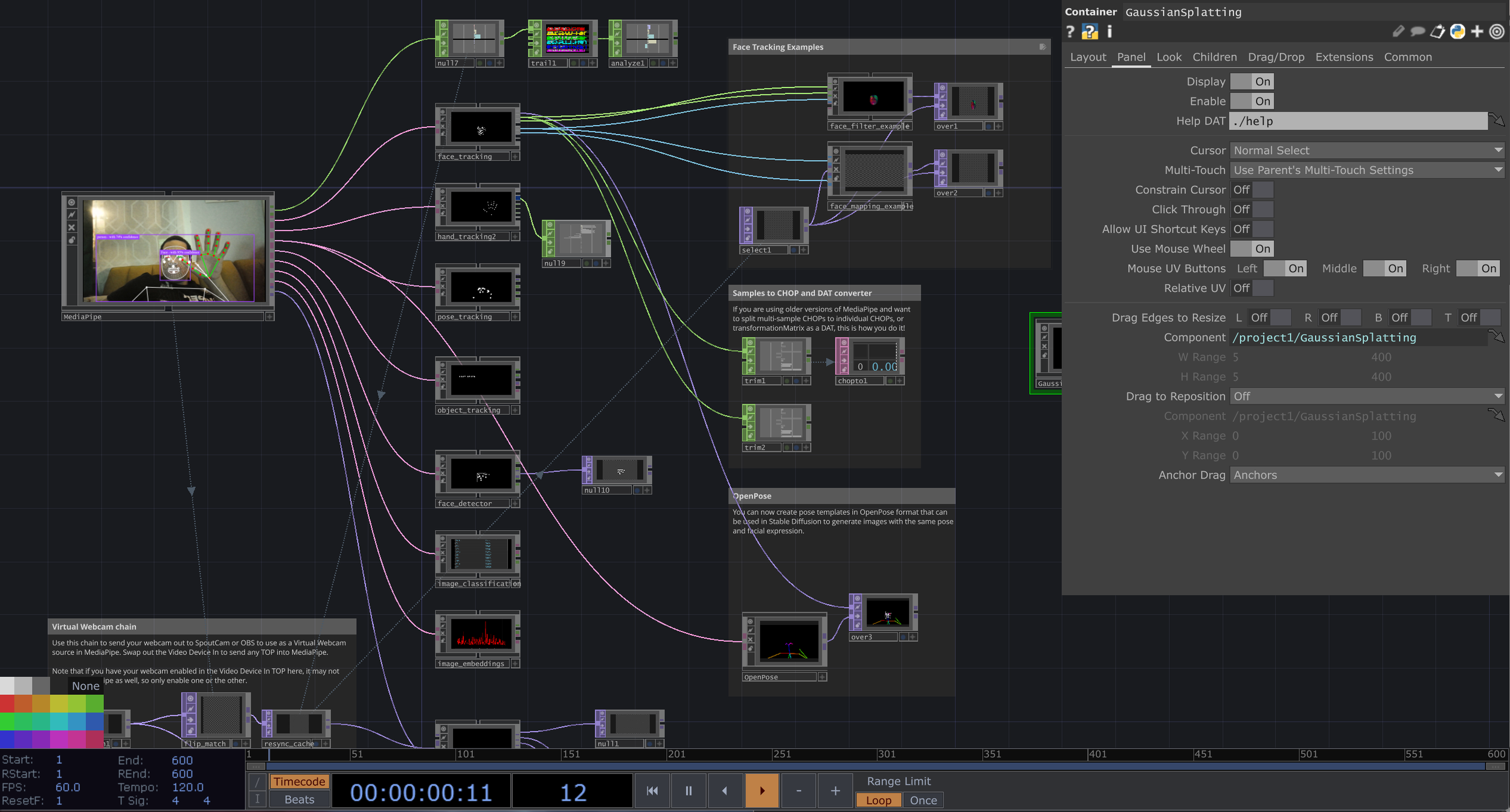

TouchDesigner Gaussian Splat Integration:

TouchDesigner Plugin:

https://derivative.ca/community-post/asset/gaussian-splatting/69107

More Integration:

Plugin Internals

Rotation Noise Distortion

Free Motion Tracking Plugin:

Small Interaction:

To Do Next Week:

Primary Goals:

Implement Dynamic 3DGS

Fix PyTorch issue with the Dynamic3DGaussians repository

Compare with 4DGaussians and SpacetimeGaussians implementations

Determine which best fits my VFX pipeline needs

Motion Tracking → Gaussian Splat Transform Pipeline

Implement a system where motion tracking data controls/transforms the Gaussian splat

Test real-time camera movements affecting splat positioning and orientation

Explore whether tracked motion can drive splat deformation or camera navigation

Explore Triangular Splatting

Test triangular splatting GitHub repos

Compare rendering quality/performance vs traditional Gaussian splatting

Secondary Goals:

Continue detail capture testing with different subject matter

Test Blender integration for potential VFX compositing applications
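As a starting point for the motion tracking → splat transform goal above, here is a minimal sketch of applying one tracked rigid transform (rotation plus translation) to a set of splats. It assumes each splat stores a position and an orientation quaternion in (w, x, y, z) order. All function names are hypothetical and not taken from the TouchDesigner plugin or any of the repos. Scales are unchanged by a rigid transform, and view-dependent color (spherical harmonics) is ignored here.

```python
import math


def quat_multiply(a, b):
    """Hamilton product of two quaternions in (w, x, y, z) order."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def quat_from_axis_angle(axis, angle_rad):
    """Unit quaternion for a rotation of angle_rad around axis."""
    x, y, z = axis
    norm = math.sqrt(x * x + y * y + z * z)
    s = math.sin(angle_rad / 2.0) / norm
    return (math.cos(angle_rad / 2.0), x * s, y * s, z * s)


def rotate_point(q, p):
    """Rotate point p by unit quaternion q via q * (0, p) * conj(q)."""
    w, x, y, z = q
    tmp = quat_multiply(q, (0.0, p[0], p[1], p[2]))
    out = quat_multiply(tmp, (w, -x, -y, -z))
    return (out[1], out[2], out[3])


def transform_splats(positions, rotations, track_rotation, track_translation):
    """Apply one tracked rigid transform to every splat.

    positions: list of (x, y, z); rotations: list of unit quaternions.
    Returns new lists; the inputs are left untouched.
    """
    new_positions, new_rotations = [], []
    for p, q in zip(positions, rotations):
        rp = rotate_point(track_rotation, p)
        new_positions.append(tuple(c + t for c, t in zip(rp, track_translation)))
        # Compose the tracked rotation with the splat's own orientation.
        new_rotations.append(quat_multiply(track_rotation, q))
    return new_positions, new_rotations


if __name__ == "__main__":
    # Hypothetical tracker sample: 90 degrees around Z, then 2 units up in Z.
    rot = quat_from_axis_angle((0.0, 0.0, 1.0), math.pi / 2.0)
    pos, _ = transform_splats([(1.0, 0.0, 0.0)], [(1.0, 0.0, 0.0, 0.0)], rot, (0.0, 0.0, 2.0))
    print(pos)
```

In a real-time setup the same transform would normally be applied on the GPU or as a single object-level matrix rather than per splat in Python. The loop here is only meant to make the math explicit.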